Onderzoeksmethoden 2/het werk/2010-11/Groep03

Group Members

Contents

- 1 Introduction

- 2 Execution

- 3 Conclusions

- 3.1 To what extent does the users computer experience influences the successfulness of ordering and configuring domain names?

- 3.2 Which parts of the system cause problems or delays during the ordering and configuring of the domain names?

- 3.3 Is the system helpful and efficient enough for customers to configure and order domain names?

- 3.4 General Findings

- 3.5 Technical users

- 3.6 Non-Technical users

- 4 Reflection

- 5 Sources

Introduction

It is increasingly common for companies to run a web shop. This research focuses on the web shop of Exonet, a company that offers IT-related services. Its order process contains complicated steps that are crucial for completing an order. Many potential customers lack knowledge of the system behind ordering a domain, and the system offers some complicated options in order to satisfy every customer. Good usability could therefore increase the chance that people are steered in the right direction: completing the order.

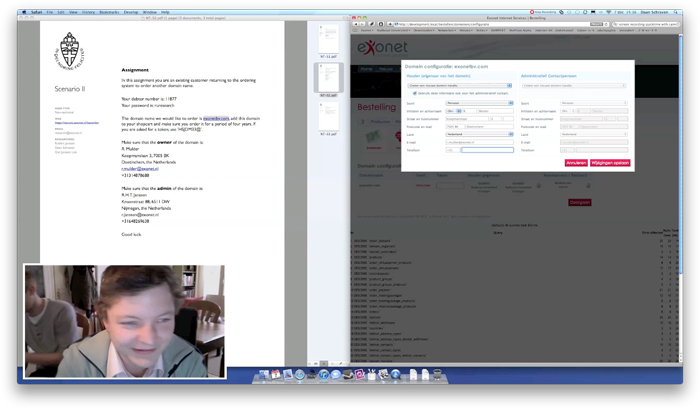

The screenshot shows the configuration page of the Exonet ordering system.

Problem statement

Countless companies offer the same services as Exonet. It is our task to make sure that we reach all potential customers, whether they are beginners or experts in this area. A new order system was launched recently, and this research was started to make sure that it is understandable for all users.

The online order system is mainly used to register new domain names and/or transfer existing ones. Customers can log in to this system and use their personal data to register new domains. Besides registering or transferring domains, it is also possible to order webhosting packages, virtual private servers and much more. Since the order process for domains is the most important part, we focus only on this aspect of the order system.

The new order system has recently replaced the old one. It has more options and needs to satisfy all existing and new customers. We think that specific parts of the system are too complicated and need to be improved. Some parts of the ordering process involve configuring a domain name, creating contacts for this domain name, setting nameservers, creating redirects and forwarding mail. These options are not mandatory, but many customers requested them, and they save both the customers and the company a lot of time.

The problem is that many customers register multiple domain names at the same time, and most of the time each of these domain names requires a specific configuration. We tried to combine these configuration options in a single popup, which might need improvement. Therefore the focus of this Think Aloud Protocol will be on this part of the system, specifically on the efficiency and the helpfulness of the system.

This leads to our main research question:

Is the system helpful and efficient enough for customers to configure and order domain names?

To answer this question we first have to answer the following subquestions:

- To what extent does the user's computer experience influence the success of ordering and configuring domain names?

- Which parts of the system cause problems or delays during the ordering and configuring of the domain names?

Method

The method we use to analyze a person's behavior is the Think Aloud Protocol.

We set up the following steps to complete our research:

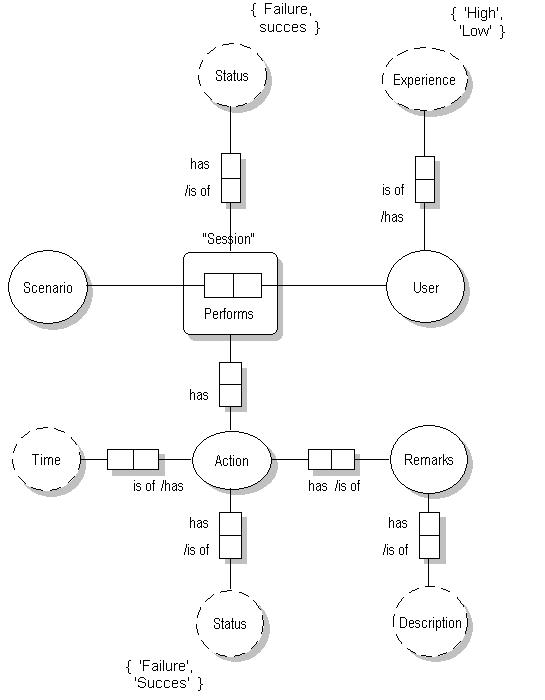

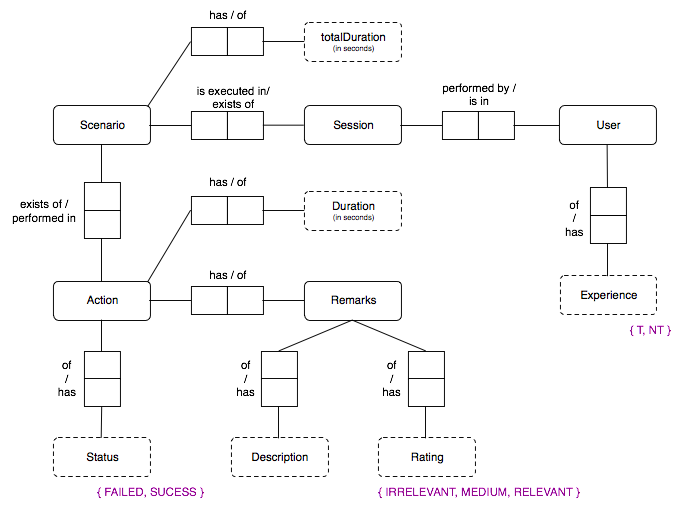

Step 1: Create a conceptual model

First we brainstorm about which data we need for our research and how we can tackle the problem. This brainstorm session will produce a lot of information, which we capture in an ORM diagram that describes our input, output and analysis. This ORM diagram will be our main tool to define how we analyze a person's behavior and what data we save. The diagram is focused on helpfulness and efficiency.

Step 2: Find users to participate

For our setup we would like a total of four users to participate: two technical and two non-technical users. This way we can compare the results across experience levels. We hope to see a significant difference in speed between technical and non-technical users. Furthermore, we hope to see an improvement in task completion over time for all test persons.

Step 3: Describe scenarios

Six different scenarios will be described for the test persons to execute. Each user will execute two or three scenarios, depending on the execution time. The scenarios will include very simple, advanced and hard tasks. We will compare them with the last 20 orders to see whether our scenarios are too simple or too advanced. After this comparison, the scenarios are ready to be executed.

Step 4: Execute scenario and collect data

To keep track of the actions during the execution of a scenario, we will use QuickTime on an iMac. QuickTime can record the screen and the audio input at the same time. To also capture facial expressions, we will start a QuickTime webcam recording. Afterwards we place the webcam video as picture-in-picture in the screen recording (see the image on the right). We can then transcribe the video recordings to text. The sessions take place at home with this equipment.

Step 5: Organize the data

After the execution of the scenarios we will organize the data according to the ORM diagram. We will save this data in a database for later analysis. To enter data into this database we've created a small web application with lots of forms. We've agreed on how to describe actions, so that we all analyze the data in the same way and the data stays consistent. For ratings, remarks and scenarios we've added option fields with selectable values to reduce the chance of human error.
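The idea of restricting fields to selectable values can be illustrated with a small check. This is only a sketch and not the actual web application: the function name `validate_action` and the dictionary layout are hypothetical, while the allowed values for status and rating follow the XML structure described under Data structuring.

```python
# Hypothetical validator, not the tool used in the study.
# Allowed values are taken from the XML structure described on this page.
ALLOWED_STATUS = {"success", "failed"}
ALLOWED_RATINGS = {1, 2, 3}  # 1 = irrelevant, 2 = medium, 3 = relevant


def validate_action(action):
    """Return a list of problems found in one action record (empty if valid)."""
    problems = []
    if action.get("status") not in ALLOWED_STATUS:
        problems.append(f"unknown status: {action.get('status')!r}")
    duration = action.get("duration")
    if not isinstance(duration, int) or duration < 0:
        problems.append(f"duration must be a non-negative number of seconds: {duration!r}")
    for remark in action.get("remarks", []):
        if remark.get("rating") not in ALLOWED_RATINGS:
            problems.append(f"unknown rating: {remark.get('rating')!r}")
    return problems


# A record that would be accepted, and one with three mistakes.
good = {"status": "success", "duration": 24, "remarks": [{"rating": 2}]}
bad = {"status": "done", "duration": -1, "remarks": [{"rating": 5}]}
print(validate_action(good))
print(validate_action(bad))
```

Rejecting free-text values at entry time is what keeps the later counts per status and per rating comparable between the people entering the data.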

Step 6: Analyze the data

When all the data has been added to the database, we will use the same web application to analyze it. We will define formulas to calculate how many actions are successful and how many relevant remarks there are throughout the sessions. Furthermore, we can easily print out the failed actions and their remarks to see where things went wrong and how we can improve the system.
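The kind of formulas meant here could look like the following sketch. It is an illustration under assumptions: the sample records are made up, the function names are hypothetical, and the real analysis was done in the web application. The only things taken from this page are the status values ('success' or 'failed'), the page names and the convention that rating 3 means relevant.

```python
from collections import Counter

# Made-up sample records; in the study these would come from the database.
actions = [
    {"page": "domain-check", "status": "success", "ratings": [2, 1, 2]},
    {"page": "shopcart", "status": "success", "ratings": [1, 3, 3]},
    {"page": "domain-config-handle", "status": "failed", "ratings": [3]},
]


def success_rate(actions):
    """Fraction of actions whose status is 'success' (0.0 for an empty list)."""
    if not actions:
        return 0.0
    return sum(a["status"] == "success" for a in actions) / len(actions)


def relevant_remarks(actions, relevant=3):
    """Number of remarks that were rated as relevant."""
    return sum(r == relevant for a in actions for r in a["ratings"])


def failures_per_page(actions):
    """Count failed actions per page to see where users get stuck."""
    return Counter(a["page"] for a in actions if a["status"] == "failed")


print(success_rate(actions))
print(relevant_remarks(actions))
print(failures_per_page(actions).most_common())
```

Grouping the failed actions by page is one way to point to the pages that cause the most problems.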

Step 7: Complete the research and write a report

When we have a clear picture from our analyzed data, we can complete the research and write the conclusions regarding the order system. These conclusions consist of tips to improve the system and show which pages cause a lot of problems for users. The data will support these tips and remarks.

Planning

| Week # | Action(s) |
|---|---|
| Week 42 | Define subject and research question |
| Week 43 | Brainstorm about the research plan |
| Week 44 | Work out the research plan and ORM model |
| Week 45 | Update the wiki and start with describing scenarios |
| Week 46 | Recruit users and finalize scenarios |
| Week 47 | Execute the scenarios with the users using TAP |
| Week 48 | Process data |
| Week 49 | Data analyzing |
| Week 50 | Data analyzing and concept report |
| Week 51 | Finalize report |

Execution

Conceptual model

The following scheme is the initial ORM. During the execution of the think aloud protocols and processing of the data we made some additions. These can be found in the reflection.


Below you will find our latest ORM scheme.

Participants

We test the system with people who have experience in this sector and with people who do not. For the research we used four participants, two from each group, so there is one session for each of the four users.

Group 1 - Technical users

Bard Duijs

Laurens Alers

Group 2 - Non-technical users

Ivy Raaijmakers

Martijn Stege

Scenarios

Execution of the scenarios is planned for 07/12/2010.


The following scenarios are based on the Exonet order page. We made three scenarios for non-technical users and three for technical users:

Non-technical users

| Scenario | Name | File |
|---|---|---|
| 1 | NT-S1 | Bestand:NT-S1.pdf |
| 2 | NT-S2 | Bestand:NT-S2.pdf |
| 3 | NT-S3 | Bestand:NT-S3.pdf |

Technical users

| Scenario | Name | File |
|---|---|---|
| 1 | T-S1 | Bestand:T-S1.pdf |
| 2 | T-S2 | Bestand:T-S2.pdf |
| 3 | T-S3 | Bestand:T-S3.pdf |

Notes

- While executing the think aloud protocols, we made the choice to use 4 participants instead of 6. Transcribing and analyzing the data turned out to be a lot more work than we expected. (30/12/2010)

Data structuring

We will save the data in XML format using the following structure (according to the ORM scheme).

<?xml version="1.0" encoding="UTF-8" ?>
<session id="1"> <!-- id = unique session id -->
  <user exp="T">Users Name</user> <!-- exp = experience: T (technical) or NT (non-technical) -->
  <scenarios>
    <scenario id="1" name="T-S1"> <!-- id = unique scenario id, name = name of the scenario document that was executed -->
      <actions>
        <action id="1"> <!-- id = unique action id -->
          <description>description goes here</description> <!-- description of the action -->
          <status>success</status> <!-- status is 'success' or 'failed' -->
          <duration>24</duration> <!-- duration in seconds -->
          <page>domain-check</page> <!-- the page on which the action is executed -->
          <remarks>
            <remark id="57" rating="2">Remark goes here</remark> <!-- id = unique remark id, rating = 1 (irrelevant), 2 (medium) or 3 (relevant) -->
            <remark id="58" rating="1">Some other remark</remark>
          </remarks>
        </action>
      </actions>
      <totalDuration>185</totalDuration> <!-- total duration of the scenario in seconds -->
    </scenario>
  </scenarios>
</session>
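To show how data in this structure can be read back for analysis, here is a minimal sketch using Python's standard `xml.etree.ElementTree` module. It is not the PHP tool used in this research. The function name `load_scenarios` and the sample document are assumptions made for the example; the sample follows the structure above, but its total duration is set to 24 so that it matches its single action.

```python
import xml.etree.ElementTree as ET

# Sample document following the structure above (values are illustrative).
SAMPLE = """<session id="1">
  <user exp="T">Users Name</user>
  <scenarios>
    <scenario id="1" name="T-S1">
      <actions>
        <action id="1">
          <description>description goes here</description>
          <status>success</status>
          <duration>24</duration>
          <page>domain-check</page>
          <remarks>
            <remark id="57" rating="2">Remark goes here</remark>
            <remark id="58" rating="1">Some other remark</remark>
          </remarks>
        </action>
      </actions>
      <totalDuration>24</totalDuration>
    </scenario>
  </scenarios>
</session>
"""


def load_scenarios(xml_text):
    """Parse one session and return its scenarios as plain dictionaries."""
    root = ET.fromstring(xml_text)
    result = []
    for scenario in root.iter("scenario"):
        actions = [
            {
                "id": int(action.get("id")),
                "status": action.findtext("status"),
                "duration": int(action.findtext("duration")),
                "page": action.findtext("page"),
                "ratings": [int(r.get("rating")) for r in action.iter("remark")],
            }
            for action in scenario.iter("action")
        ]
        result.append({
            "name": scenario.get("name"),
            "actions": actions,
            "total_duration": int(scenario.findtext("totalDuration")),
        })
    return result


scenarios = load_scenarios(SAMPLE)
first = scenarios[0]
# Consistency check: the action durations should add up to totalDuration.
print(first["name"], sum(a["duration"] for a in first["actions"]), first["total_duration"])
```

Comparing the summed action durations with `totalDuration` is a cheap way to catch transcription mistakes before the statistics are calculated.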

The initial XML structure was:

<session id=""> <!-- id = unique session id -->
  <user name="" exp=""></user> <!-- name = the user's name, exp = experience: T (technical) or NT (non-technical) -->
  <scenario id=""> <!-- id = the id of the scenario -->
    <actions>
      <action>
        <description></description> <!-- a description of the action performed -->
        <remarks>
          <remark rating=""></remark> <!-- multiple remarks can be stored within an action; rating is an importance value from 1 to 3 -->
        </remarks>
        <status></status> <!-- status can be success or failed -->
        <duration></duration> <!-- the duration of the action in seconds -->
      </action>
    </actions>
  </scenario>
  <totalDuration></totalDuration> <!-- the total duration of the scenario in seconds -->
</session>

Notes

- While structuring the data we decided it would come in handy to add a rating to the remarks of the user. (30/11/2010)

- While analyzing the data we changed the structure into a new, improved version which holds more data. The old one is still shown on the page. (30/12/2010)

- After our presentation on 13/01/2011 we received feedback about the rating terms we used, and we decided to change them to irrelevant, medium and relevant.

Data analysis

Method for Transcribing the data

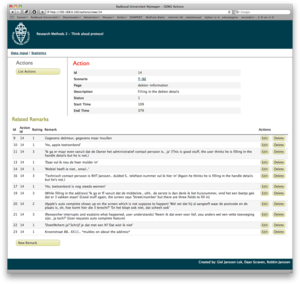

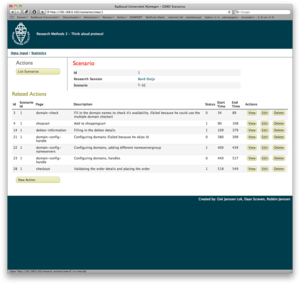

To transcribe all the data we gathered during the Think Aloud Protocol, we created a custom tool (in PHP) instead of editing the XML manually. This is more efficient and keeps us from forgetting to add information. Besides adding, modifying and deleting data, the tool also comes in handy for generating statistics for our conclusions. We took three screenshots of the tool, which are described below.

Part of transcribing the data is adding a rating to each remark of a user. This rating is important because a lot is said during a think-aloud session: many relevant things, but also a lot of nonsense. We decided whether a remark is 'Irrelevant', 'Medium' or 'Relevant' by checking whether it relates to the system and, if so, how. A remark could be something like "I don't understand this", which might be relevant, or a suggestion such as "If you add x to this screen, that would come in handy!". After rating all the remarks, we went through the remarks rated relevant and decided with the three of us whether each one really was relevant.

The screenshots show, from left to right:

- An overview of all the Remarks within an Action

- An overview of all the Actions within a Scenario

- The page we used to add remarks to an Action

XML Data


<?xml version="1.0" encoding="UTF-8" ?>

<session id="7">

<user exp="T">Laurens Alers</user>

<scenario id="2" name="T-S1">

<actions>

<action id="39">

<description>Logging in as the debtor provided</description>

<status>success</status>

<duration>24</duration>

<page>domain-check</page>

<remarks>

<remark id="57" rating="2">'Debtornumber.. password, nou debiteur' (Means the user sees the login form, which is good!)</remark>

<remark id="58" rating="1">'Waar is de keypad?'</remark>

<remark id="59" rating="2">'Okay, ingelogd' (Action successful)</remark>

</remarks>

</action>

<action id="41">

<description>Checking the domains for availability</description>

<status>success</status>

<duration>34</duration>

<page>domain-check</page>

<remarks>

<remark id="60" rating="1">'Ik heb al een domein, en wil nog een nieuwe registreren'</remark>

<remark id="61" rating="2">'Het domein... ff kijken, ru-research.nl.. controleer' (User finds the button, good stuff)</remark>

<remark id="62" rating="3">'Beschikbaar' (User sees the difference between available domains and used domains)</remark>

<remark id="64" rating="3">'Registratie.. 1 jaar.. 2 jaar moet ik hem hebben' (Can't change it to 2 years here, but the user thinks he can. Continues anyway)</remark>

</remarks>

</action>

<action id="44">

<description>Add to shoppingcart</description>

<status>success</status>

<duration>22</duration>

<page>shopcart</page>

<remarks>

<remark id="68" rating="1">'Toevoegen aan winkelwagen'</remark>

<remark id="69" rating="3">'Oke.. termijn.. per 2 jaar, oke ziet er prima uit' (User understands that he can change the term here, good stuff)</remark>

<remark id="71" rating="3">'De owner..? Nouja, doorgaan' (User thinks he can change the handles here, but decides to continue anyway)</remark>

</remarks>

</action>

<action id="45">

<description>Changing the handles</description>

<status>success</status>

<duration>32</duration>

<page>domain-config-handle</page>

<remarks>

<remark id="73" rating="1">'RAD007.. de admin en de owner, nou dat klopt dus niet..' (User understands the screen and sees that it is incorrect)</remark>

<remark id="74" rating="3">'Bewerken..' 'RAD008 moet de houder zijn' (User clicks on the select box immediately, which is good)</remark>

<remark id="75" rating="2">'De admin..' 'Nou uitvinken dan maar.. ja!' (User deactivates the "copy owner to admin" checkbox) 'RAD0007, nou dat klopt allemaal wel'</remark>

</remarks>

</action>

<action id="48">

<description>Attaching different nameservers</description>

<status>success</status>

<duration>56</duration>

<page>domain-config-nameservers</page>

<remarks>

<remark id="77" rating="2">'nameservers.. redirect' (user finds the edit button)</remark>

<remark id="79" rating="3">'hmm.. bestaande nameserver, ah nieuwe nameserver aanmaken'</remark>

<remark id="80" rating="1">'Oke nou.. (fills in details)' 'tweede nameservers, nou derde hoeft niet, wijzigingen opstaan'</remark>

<remark id="81" rating="1">'Nou, good luck, doorgaan!'</remark>

</remarks>

</action>

<action id="50">

<description>Check order details and placing order</description>

<status>success</status>

<duration>17</duration>

<page>checkout</page>

<remarks>

<remark id="82" rating="2">'Nou, zal allemaal wel kloppen ik ga akkoord'</remark>

<remark id="83" rating="1">'Prima, BAM, freaking easy' (user is done)</remark>

</remarks>

</action>

</actions>

</scenario>

<totalDuration>185</totalDuration>

</session>

<session id="6">

<user exp="T">Bard Duijs</user>

<scenario id="1" name="T-S2">

<actions>

<action id="3">

<description>Fill in the domain names to check their availability. (failed because he could have used the multiple domain checker)</description>

<status>failed</status>

<duration>55</duration>

<page>domain-check</page>

<remarks>

<remark id="2" rating="2">'Add to shoppingcart' (means he found the right button)</remark>

</remarks>

</action>

<action id="4">

<description>Add to shoppingcart</description>

<status>success</status>

<duration>18</duration>

<page>shopcart</page>

<remarks>

<remark id="3" rating="3">'Make sure the owner.. Nou volgens mij kan ik dat hier niet instellen lijkt' (Wonders if he can change the handles to the right handles in this screen already)</remark>

<remark id="8" rating="3">'Voelt wel een beetje risky aan van zou ik dat niet hier moeten doen?' (Wonders if he can change this later on in the system)</remark>

</remarks>

</action>

<action id="14">

<description>Filling in the debtor details</description>

<status>success</status>

<duration>270</duration>

<page>debtor-information</page>

<remarks>

<remark id="9" rating="1">Gegevens debiteur, gegevens maar invullen</remark>

<remark id="10" rating="1">'Ho, apple toetsenbord'</remark>

<remark id="11" rating="3">'Ik ga er maar even vanuit dat de Owner het administratief contact persoon is.. ja' (This is good stuff, the user thinks he is filling in the handle details but he is not.)</remark>

<remark id="13" rating="1">'Daar vul ik nou de heer mulder in'</remark>

<remark id="14" rating="1">'Mobiel heeft ie niet.. email..'</remark>

<remark id="16" rating="3">'Technisch contact persoon is RHT Janssen.. dubbel S.. telefoon nummer vul ik hier in' (Again he thinks he is filling in the handle details but he's not.)</remark>

<remark id="17" rating="1">'Ho, toetsenbord is nog steeds wennen'</remark>

<remark id="19" rating="3">(While filling in the address) 'Ik ga er ff vanuit dat de middelste.. uhh.. de eerste is dan denk ik het huisnummer, vind het een beetje gek dat er 3 vakken staan' (Good stuff again, the screen says 'Street/number' but there are three fields to fill in)</remark>

<remark id="20" rating="2">(Apple's auto complete shows up on the screen, which is not supposed to happen) 'Wel vet dat hij al aangeeft waar de postcode en de plaats is, oh, hoe komt hier die 3 terecht?' 'En het klopt ook niet, dat scheelt ook'</remark>

<remark id="21" rating="3">(Researcher interrupts and explains what happened, user understands) 'Neem ik dat even voor lief, zou anders wel een vette toevoeging zijn.. ja toch?' (User requests auto complete feature)</remark>

<remark id="22" rating="1">'DoetiNchem ja? Schrijf je dat met een N? Dat wist ik niet'</remark>

<remark id="23" rating="1">Kroonstraat 88.. 6511.. *mumbles on about the address*</remark>

</remarks>

</action>

<action id="21">

<description>Configuring domains (Failed because he skips it)</description>

<status>failed</status>

<duration>19</duration>

<page>domain-config-handle</page>

<remarks>

<remark id="26" rating="3">'Oke, de houder moet ik nou als het goed is, denk ik, waarom is hij nou niet ingevuld? Dat snap ik niet.' (User skips to next action)</remark>

</remarks>

</action>

<action id="22">

<description>Configuring domains, adding different nameservergroup</description>

<status>success</status>

<duration>39</duration>

<page>domain-config-nameservers</page>

<remarks>

<remark id="29" rating="1">'Oke wacht ff, laten we eerst maar ff de nameserver van het duitse domein'</remark>

<remark id="30" rating="3">'Nieuw nameservergroup' (User understands the select box!)</remark>

<remark id="32" rating="2">'Ik moet 3 nieuwe nameservers aanmaken, ik gebruik de naam duits' 'nameserver 1, nameserver 2 exonet opslaan'</remark>

<remark id="33" rating="3">'Wijzigingen opslaan? Ik heb niks gewijzigd ik voeg wat nieuws toe dus dat vind ik opzicht een beetje een rare naam'</remark>

</remarks>

</action>

<action id="23">

<description>Configuring domains, handles</description>

<status>failed</status>

<duration>77</duration>

<page>domain-config-handle</page>

<remarks>

<remark id="36" rating="2">'Oke dat ging wel goed, ik vind alleen dit (points at owner handle with mouse) nog steeds raar waarom staat dit nog op dat oude?' (Finds edit screen of the handle, but with a bit of luck)</remark>

<remark id="37" rating="3">'Volgens mij heb ik net, oh oke die vallen nu al onder mijn bedrijf dat is opzicht ook wel.. beetje typisch, vind ik, ik kan het ook niet wijzigen' (User clicks around, irritated; it is not clear that a handle is created using the debtor details)</remark>

<remark id="39" rating="3">'Dus sowieso als ik een domein registreer moet het administratief en de houder altijd bij mijzelf.. bij mijn bedrijf werken denk ik dan?' (Unclear that he can create new handles)</remark>

<remark id="40" rating="2">'Gebruik deze informatie..' (User finds the button to copy information from owner handle to admin handle)</remark>

<remark id="41" rating="3">'Ja ik kan wel weer een nieuwe gaan creeeren, maar goed ik denk dat dat in dit geval niet..' (User fails to create a new handle for the different owner/admin)</remark>

<remark id="42" rating="3">'Oke token heb ik in geen van de gevallen nodig, waarom staat die hier nog?' (User has a point: no transfers that need tokens are ordered, so why show the field?)</remark>

</remarks>

</action>

<action id="28">

<description>Validating the order details and placing the order</description>

<status>success</status>

<duration>31</duration>

<page>checkout</page>

<remarks>

<remark id="45" rating="2">(User finds the general conditions button, that's a good thing)</remark>

<remark id="47" rating="1">'Oke, ik ben klaar'</remark>

</remarks>

</action>

</actions>

</scenario>

<totalDuration>509</totalDuration>

</session>

<session id="8">

<user exp="NT">Ivy Raaijmakers</user>

<scenario id="3" name="NT-S1">

<actions>

<action id="13">

<description>Check availability domain daanschraven.nl and add to shopcart. (failed because should have added all three domain names)</description>

<status>failed</status>

<duration>39</duration>

<page>domain-check</page>

<remarks>

<remark id="7" rating="3">Ivy: "He, deze is al in gebruik. Moet ik gewoon hierheen? Ik moet dit toch aan jouw vragen ofniet?" Daan: "Nee ik mag niks zeggen, je moet doen wat daar staat." Ivy: "Ja maar die doet het toch al."</remark>

</remarks>

</action>

<action id="16">

<description>Going to next step after shopcart, where she finally understands what she did wrong.</description>

<status>success</status>

<duration>32</duration>

<page>domain-check</page>

<remarks>

<remark id="12" rating="3">Ivy: "Haha, dit is niet leuk. Ja.. ik snap het niet."</remark>

<remark id="31" rating="3">Ivy: "Ow wacht!" She understands what she did wrong.</remark>

</remarks>

</action>

<action id="17">

<description>Adding the domain gieljanssenlok.nl to the shopcart. (failed because again only added one domain)</description>

<status>failed</status>

<duration>18</duration>

<page>domain-check</page>

<remarks>

<remark id="18" rating="1">Ivy: "Die zal ook wel in gebruik zijn. Ow."</remark>

</remarks>

</action>

<action id="18">

<description>Add the domain robbinjanssen.nu to the shopcart.</description>

<status>success</status>

<duration>17</duration>

<page>domain-check</page>

<remarks>

</remarks>

</action>

<action id="20">

<description>Filling in the personal information fields.</description>

<status>success</status>

<duration>113</duration>

<page>debtor-information</page>

<remarks>

<remark id="25" rating="1">Ivy: "Moet ik dat nou helemaal gaan invullen?"</remark>

<remark id="27" rating="1">Ivy: "Kan je niet gewoon blablablabla doen?"</remark>

<remark id="28" rating="3">Ivy: "Ahh" She clicked the copy information buttons, this saves work.</remark>

</remarks>

</action>

<action id="24">

<description>Domain configuration (failed because she thought she was finished with the first assignment).</description>

<status>failed</status>

<duration>15</duration>

<page>domain-config</page>

<remarks>

<remark id="34" rating="3">Daan: "Nee nee, nog gewoon de eerste, je moet nog doorgaan."</remark>

<remark id="35" rating="1">Ivy: "We moeten hem verhuizen toch?"</remark>

<remark id="38" rating="1">Daan: "Je moet gewoon verder gaan met de eerste assignment"</remark>

</remarks>

</action>

<action id="27">

<description>Domain configuration (failed because she doesn't understand what to fill in the token field)</description>

<status>failed</status>

<duration>16</duration>

<page>domain-config</page>

<remarks>

<remark id="43" rating="3">Ivy: "Ja maar ik kan dit toch niet?" (filling in the token)</remark>

<remark id="44" rating="2">Daan: "Dan moet je de tekst lezen."</remark>

<remark id="46" rating="2">Daan: "Nee." (she clicked the wrong place, I helped a little)</remark>

</remarks>

</action>

<action id="30">

<description>Domain configuration - Add the token</description>

<status>success</status>

<duration>32</duration>

<page>domain-config</page>

<remarks>

<remark id="48" rating="3">Ivy: "Dus die moet gewoon weg" (she doesn't understand)</remark>

<remark id="49" rating="3">Ivy: "Owja! Ow okay" (she now understands where to fill in the token)</remark>

</remarks>

</action>

<action id="31">

<description>Order checkout (failed because she did not agree to the terms and conditions)</description>

<status>failed</status>

<duration>6</duration>

<page>checkout</page>

<remarks>

</remarks>

</action>

<action id="32">

<description>Order checkout</description>

<status>success</status>

<duration>9</duration>

<page>checkout</page>

<remarks>

<remark id="50" rating="1">Daan: "Okay, dat was opdracht 1."</remark>

</remarks>

</action>

</actions>

</scenario>

<totalDuration>297</totalDuration>

<scenario id="4" name="NT-S2">

<actions>

<action id="34">

<description>Adding exonetbv.com to the shopcart.</description>

<status>success</status>

<duration>17</duration>

<page>domain-check</page>

<remarks>

</remarks>

</action>

<action id="35">

<description>Change the term from one year to four years on shopcart.</description>

<status>success</status>

<duration>10</duration>

<page>shopcart</page>

<remarks>

</remarks>

</action>

<action id="36">

<description>Signing in (failed because she is going to create a new debtor)</description>

<status>failed</status>

<duration>27</duration>

<page>debtor-information</page>

<remarks>

<remark id="52" rating="2">Daan: "Goed the opdracht doorlezen." Needed to help because it was not the assignment</remark>

<remark id="53" rating="3">Ivy: "Huh, maar dit moet toch de owner zijn?" (she points out that she doesn't have all the information)</remark>

<remark id="54" rating="3">Ivy: "Owja" She found what needed to be done.</remark>

</remarks>

</action>

<action id="38">

<description>Signing in</description>

<status>success</status>

<duration>39</duration>

<page>debtor-information</page>

<remarks>

<remark id="55" rating="1">Ivy: "Ow, geen idee of het wachtwoord nu goed is"</remark>

</remarks>

</action>

<action id="40">

<description>Filling in the token for exonetbv.com</description>

<status>success</status>

<duration>17</duration>

<page>domain-config</page>

<remarks>

</remarks>

</action>

<action id="42">

<description>Domain configuration (failed: she is at the order checkout and finds out that the owner and admin do not have the correct information. She clicks on the shopcart to go back. She reaches the domain configuration page (the right page) again, but clicks the next button and is back at checkout.)</description>

<status>failed</status>

<duration>70</duration>

<page>domain-config-handle</page>

<remarks>

<remark id="63" rating="3">Ivy: "Maar nu weet ik toch niet of dit klopt?" (the handle information for the domains)</remark>

<remark id="65" rating="3">Ivy: "Nee." (She finds out the shopcart isn't the place to change that information)</remark>

<remark id="67" rating="2">Daan: "Nee, je bent nog iets vergeten." (I had to help, because she was about to finish the order)</remark>

<remark id="70" rating="3">Ivy: "Wat dan, ik zie.. ik weet dat toch niet." (referring to changing the handle information)</remark>

<remark id="72" rating="3">Ivy: "Dit zeker" (referring to invoice address) Daan: "Nee." (pointing out that it is not what she needs)</remark>

<remark id="76" rating="3">Ivy: "Ja maar waar kan ik dat vinden? Ik zie dat niet zo goed." Daan: "Bekijk alles eens goed." Ivy: "Ow, ik denk dat ik het al weet" (she finds out the configuration page is the place to be)</remark>

</remarks>

</action>

<action id="49">

<description>Domain configuration (failed because she hit the nameservers edit button)</description>

<status>failed</status>

<duration>14</duration>

<page>domain-config-handle</page>

<remarks>

</remarks>

</action>

<action id="51">

<description>Domain configuration</description>

<status>success</status>

<duration>155</duration>

<page>domain-config-handle</page>

<remarks>

<remark id="86" rating="3">Ivy: "Ik snap dat echt niet hoor, ik weet niet wat ik moet doen"</remark>

<remark id="87" rating="3">Ivy: "Hoe kom ik bij deze gegevens dan?" (referring to the handle information on the assignment)</remark>

<remark id="88" rating="1">Daan: "Dan ga maar door."</remark>

<remark id="89" rating="3">Ivy: "Hoe kan ik dat wijzigen?" Daan: "Zoek naar een wijzig icoontje." (again a little help..)</remark>

<remark id="90" rating="3">Ivy: "Ow hier, omdat het nu van de Radboud is.. moet ik dat wijzigen."</remark>

</remarks>

</action>

<action id="52">

<description>Order checkout</description>

<status>success</status>

<duration>15</duration>

<page>checkout</page>

<remarks>

</remarks>

</action>

</actions>

</scenario>

<totalDuration>661</totalDuration>

<scenario id="5" name="NT-S3">

<actions>

<action id="53">

<description>Signing in</description>

<status>success</status>

<duration>18</duration>

<page>debtor-information</page>

<remarks>

<remark id="93" rating="1">Ivy: "Ja maakt niet uit, moet ik dat nu eerst invullen."</remark>

</remarks>

</action>

<action id="54">

<description>Adding domain ruresearch.nl to the shoppingcart</description>

<status>success</status>

<duration>22</duration>

<page>domain-check</page>

<remarks>

</remarks>

</action>

<action id="56">

<description>Change the term from one year to two years in shopping cart.</description>

<status>success</status>

<duration>4</duration>

<page>shopcart</page>

<remarks>

</remarks>

</action>

<action id="57">

<description>Domain configuration (changing domain handles)</description>

<status>success</status>

<duration>57</duration>

<page>domain-config-handle</page>

<remarks>

<remark id="95" rating="1">Daan: "De correcte handle id's staan er niet tussen, dat is een foutje van mij, ze heten nu alleen anders, je ziet het vanzelf."</remark>

<remark id="96" rating="3">Ivy: "Dan moet je gewoon zo doen, en dan die zo." (SHE UNDERSTANDS!!)</remark>

</remarks>

</action>

<action id="58">

<description>Domain configuration (failed, she needs to add redirect but clicks to order checkout)</description>

<status>failed</status>

<duration>27</duration>

<page>domain-config-nameservers</page>

<remarks>

<remark id="98" rating="3">Ivy: "En dan die... waar moet dat e-mail adres naar toe" (referring to the redirect e-mail address)</remark>

<remark id="99" rating="3">*mumbling that she does not know where*</remark>

</remarks>

</action>

<action id="60">

<description>Domain configuration (adding redirect)</description>

<status>success</status>

<duration>33</duration>

<page>domain-config-nameservers</page>

<remarks>

</remarks>

</action>

<action id="62">

<description>Order checkout</description>

<status>success</status>

<duration>5</duration>

<page>checkout</page>

<remarks>

</remarks>

</action>

</actions>

</scenario>

<totalDuration>827</totalDuration>

</session>

<session id="9">

<user exp="NT">Martijn Stege</user>

<scenario id="6" name="NT-S1">

<actions>

<action id="7">

<description>Adding the three domain names to the order</description>

<status>success</status>

<duration>84</duration>

<page>domain-check</page>

<remarks>

<remark id="5" rating="3">'domain is not available' he immediately sees that the domain is not available</remark>

</remarks>

</action>

<action id="12">

<description>Entering the move token for the domain that is 'in use'</description>

<status>success</status>

<duration>19</duration>

<page>domain-config</page>

<remarks>

</remarks>

</action>

<action id="15">

<description>accepting the order in the order overview</description>

<status>success</status>

<duration>17</duration>

<page>checkout</page>

<remarks>

<remark id="15" rating="2">'I agree to the terms, but I have not read them' (it is mandatory to read the Terms and Conditions)</remark>

</remarks>

</action>

</actions>

</scenario>

<totalDuration>120</totalDuration>

<scenario id="7" name="NT-S2">

<actions>

<action id="19">

<description>Logging in with the given username and password</description>

<status>success</status>

<duration>35</duration>

<page>debtor-information</page>

<remarks>

<remark id="24" rating="1">'Whoa, made a mistake' (he made a typo while filling in the username and password)</remark>

</remarks>

</action>

<action id="25">

<description>Add the domain exonetbv.com to shopping cart</description>

<status>success</status>

<duration>29</duration>

<page>domain-check</page>

<remarks>

</remarks>

</action>

<action id="26">

<description>Changing the term ('termijn') of the contract to 4 years</description>

<status>success</status>

<duration>5</duration>

<page>shopcart</page>

<remarks>

</remarks>

</action>

<action id="29">

<description>Fill in the token</description>

<status>success</status>

<duration>14</duration>

<page>domain-config</page>

<remarks>

<remark id="94" rating="3">He searches for the 'change admin and owner' fields by moving the mouse pointer around for a couple of seconds</remark>

</remarks>

</action>

<action id="33">

<description>Checking overview of order and accepting terms and conditions</description>

<status>success</status>

<duration>32</duration>

<page>checkout</page>

<remarks>

<remark id="51" rating="3">'but now I don't know who the owner is' (he skipped that part in the previous shopping cart overview; that part is not shown very clearly). User restarts the scenario.</remark>

</remarks>

</action>

<action id="37">

<description>Add the domain exonetbv.com to shopping cart for the second time</description>

<status>success</status>

<duration>55</duration>

<page>domain-check</page>

<remarks>

<remark id="56" rating="3">He looks for the field 'administrator' or 'owner' (it's not clear where he can find or fill in this info)</remark>

<remark id="92" rating="1">He has to log in again</remark>

</remarks>

</action>

<action id="43">

<description>Changing the term ('termijn') of the contract to 4 years for the second time</description>

<status>success</status>

<duration>9</duration>

<page>debtor-information</page>

<remarks>

<remark id="66" rating="3">'this is clear' (the fact that he can't fill in any other info on that page)</remark>

</remarks>

</action>

<action id="46">

<description>Filling in the correct owner and administrator for the domain</description>

<status>success</status>

<duration>142</duration>

<page>domain-config-handle</page>

<remarks>

<remark id="78" rating="3">He has problems finding the correct button for executing this action</remark>

<remark id="84" rating="3">When changing the owner of the domain, he has problems finding the edit button (the fields are not editable by default; you have to check the edit button first)</remark>

<remark id="91" rating="3">When changing the administrator it is not very clear that he has to uncheck the button 'same as owner' and the option 'create a new domain handler'</remark>

</remarks>

</action>

<action id="47">

<description>Checking overview of order and accepting terms and conditions for the second time</description>

<status>success</status>

<duration>22</duration>

<page>checkout</page>

<remarks>

</remarks>

</action>

<action id="55">

<description>Filling in the move token for the second time</description>

<status>success</status>

<duration>18</duration>

<page>domain-config</page>

<remarks>

</remarks>

</action>

<action id="67">

<description>He clicks next, forgets changing the handlers</description>

<status>failed</status>

<duration>1</duration>

<page>domain-config-handle</page>

<remarks>

</remarks>

</action>

</actions>

</scenario>

<totalDuration>482</totalDuration>

<scenario id="8" name="NT-S3">

<actions>

<action id="59">

<description>Logging in with the given username and password</description>

<status>success</status>

<duration>11</duration>

<page>debtor-information</page>

<remarks>

<remark id="100" rating="2">No problems, has done this a couple of times now</remark>

</remarks>

</action>

<action id="61">

<description>Adding the domain ruresearch.nl to the shopping cart</description>

<status>success</status>

<duration>14</duration>

<page>domain-check</page>

<remarks>

<remark id="101" rating="2">No problems, has done this a couple of times now</remark>

<remark id="106" rating="2">'I get this now' (he learned from previous mistakes)</remark>

</remarks>

</action>

<action id="63">

<description>Selecting the domain order period</description>

<status>success</status>

<duration>7</duration>

<page>shopcart</page>

<remarks>

<remark id="102" rating="2">No problems, has done this a couple of times now</remark>

</remarks>

</action>

<action id="64">

<description>Selecting the owner and admin for the domain</description>

<status>success</status>

<duration>50</duration>

<page>domain-config-handle</page>

<remarks>

<remark id="103" rating="3">No problems with selecting RAD007 and RAD008 as handles</remark>

<remark id="104" rating="3">'This is not very logical' (it's a bit unclear where and why he has to uncheck the button 'same as owner')</remark>

</remarks>

</action>

<action id="65">

<description>Selecting a redirect to www.ru.nl and the Exonet e-mail address</description>

<status>success</status>

<duration>39</duration>

<page>domain-config-nameservers</page>

<remarks>

<remark id="105" rating="3">He first opens the add redirect/nameservers option window, then closes it and opens it again (the system shows the nameserver option by default; you then have to select the redirect option in the drop-down box)</remark>

</remarks>

</action>

<action id="68">

<description>Accepting terms and conditions and finalizing the order.</description>

<status>success</status>

<duration>5</duration>

<page>checkout</page>

<remarks>

</remarks>

</action>

</actions>

</scenario>

<totalDuration>608</totalDuration>

</session>

|

Notes/problems we encountered

- During the analysis of the data we only transcribed and defined actions that were relevant for the research. We excluded actions such as reading the scenario assignment.

- During the transcribing of the data, we forgot to record the page on the website that each action referred to. This was necessary to get a good overview of which pages on the ordering site cause problems and need improvement. That is why we added this option to the database and the transcribing tool.

Conclusions

After executing the Think Aloud Protocol we analyzed the gathered data and generated statistics. Before we can answer our main research question, we need to find answers to the following subquestions:

To what extent does the user's computer experience influence the successfulness of ordering and configuring domain names?

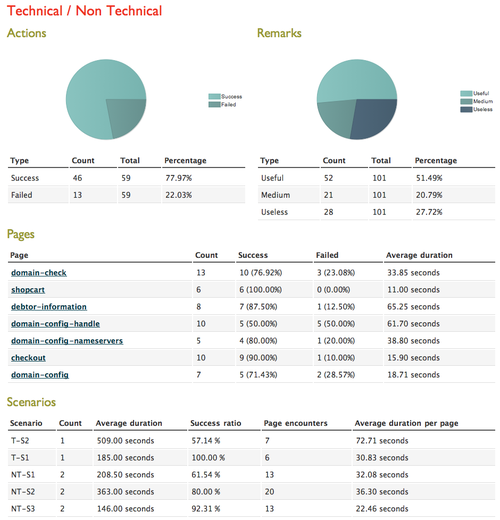

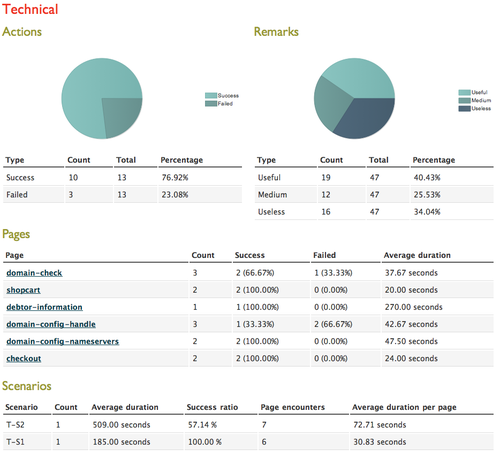

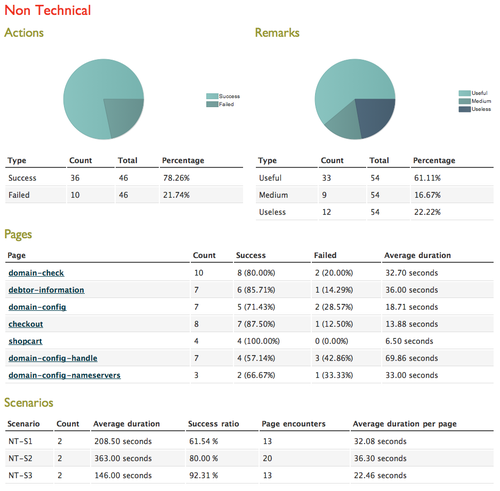

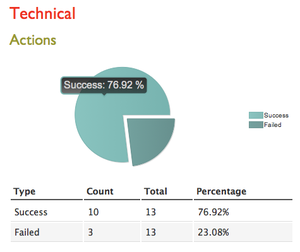

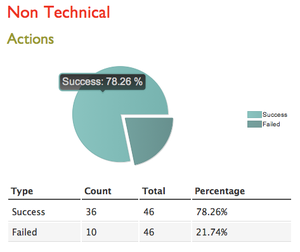

To support the answer to this question we refer to the images above, which are screenshots from our tool.

Looking at the statistics shown above, we find that the results are not representative of the real findings; the reflection explains why. The success rate of the technical users (76,92%) is lower than that of the non-technical users (78,26%). The cause is that the technical users only did one scenario instead of the originally planned three, whereas the non-technical users executed all three. Of the thirteen actions the technical users performed, ten were successful. The non-technical users show a higher success rate because they executed all three scenarios and therefore went through a learning process; the first scenario they executed was less successful than the overall figure suggests. Once this is taken into account, the data show (as expected) that the technical users have fewer problems using the system than the non-technical users.
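As a small worked example, the success rate reported for the technical users can be reproduced from the action counts given in the text. This is only a sketch: the function name `success_rate` is hypothetical and is not taken from our tool.

```python
def success_rate(statuses):
    """Return the percentage of actions whose status is 'success'."""
    if not statuses:
        raise ValueError("no actions recorded")
    successes = sum(1 for status in statuses if status == "success")
    return round(100 * successes / len(statuses), 2)


# Thirteen technical-user actions, ten of them successful, as reported in the text.
technical = ["success"] * 10 + ["failed"] * 3
print(success_rate(technical))  # 76.92
```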

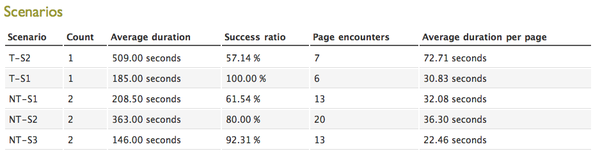

In the image above, the scenarios T-S1 and T-S2 were each executed only once, and NT-S1 was executed twice. T-S1 and T-S2 were both a first run for the technical users, so combining these results gives a success rate of 78,57% and an average duration per page of 88,13 s. This duration is clearly longer than the NT-S1 duration, but the T-S2 scenario is more complicated than the first. So the real results are as follows:

- Non-technical users executed their first round with a total success rate of 61,54%.

- Technical users executed their first round with a total success rate of 78,57%.

So computer experience definitely influences the successfulness of ordering and configuring domain names: looking only at the first scenario, the technical users did a lot better than the non-technical users. On the other hand, this confirms our expectation that the system is easy to learn: once users/customers have gone through it, they know how to order and configure domains. It also means that some pages need improvement before non-technical users achieve the same results as technical users.

Which parts of the system cause problems or delays during the ordering and configuring of the domain names?

We found that certain parts of the system cause problems and delays. This can be derived from the statistics shown in the figure 'General findings' below. As you can see, a couple of pages generate a high percentage of failed actions. The remarks for each page with a high failure percentage are shown below.
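A per-page failure percentage of this kind can be derived from a session log in the XML format shown in the chapter 'Execution'. The sketch below is an illustration only, not the code of our tool: the sample data is made up, the function name `page_stats` is hypothetical, and it assumes the log is wrapped in a single root element.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict

# Hypothetical sample in the same shape as the session log; the values are illustrative.
SAMPLE = """
<session id="0">
  <user exp="NT">Example user</user>
  <scenario id="0" name="NT-S1">
    <actions>
      <action id="1"><status>success</status><duration>20</duration><page>domain-check</page></action>
      <action id="2"><status>failed</status><duration>30</duration><page>domain-config-handle</page></action>
      <action id="3"><status>success</status><duration>50</duration><page>domain-config-handle</page></action>
    </actions>
  </scenario>
</session>
"""


def page_stats(xml_text):
    """Map each page to (failed percentage, mean duration in seconds)."""
    root = ET.fromstring(xml_text)
    totals = defaultdict(lambda: {"n": 0, "failed": 0, "seconds": 0})
    for action in root.iter("action"):
        entry = totals[action.findtext("page")]
        entry["n"] += 1
        entry["failed"] += action.findtext("status") == "failed"
        entry["seconds"] += int(action.findtext("duration"))
    return {
        page: (round(100 * e["failed"] / e["n"], 2), e["seconds"] / e["n"])
        for page, e in totals.items()
    }


for page, (failed_pct, mean_s) in page_stats(SAMPLE).items():
    print(f"{page}: {failed_pct}% failed, {mean_s:.1f}s on average")
```

Grouping by the `page` element is what makes it possible to see which step of the ordering process causes the most failures.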

Domain config handle (50% failed overall)

Remarks of successful actions

Action: Domain configuration (changing domain handles)

Action: Filling in the correct owner and administrator for the domain

Action: Selecting the owner and admin for the domain

Domain config (28,5% failed overall)

Action: Domain configuration (failed because she doesn't understand what to fill in the token field)

Success actions and relevant remarks

Is the system helpful and efficient enough for customers to configure and order domain names?

In short, no. While executing our scenarios we came to the conclusion that there are parts of the system which need quite some improvement. The following points need some extra attention.

- Domain check

  - Users are not aware of the multiple domain selection tool.

  - Users try to change the registration period (that is, the number of years) in this screen.

  - Some non-technical users have no clue what a transfer or a registration is.

- Shopcart

  - Some users ask if they can change contacts for domains here. We think the previous page should make clear where in the ordering process they can change these contacts.

- Debtor information

  - In one particular case a user thought that he was editing contacts for domains here. After reviewing the system, we cannot blame him: the page states nowhere that the form is about debtor details rather than domain details.

- Domain config

  - To some users it was unclear what a token was. The page should explain this.

  - One user noted that the token field is useless when only registrations are involved; he is right.

- Domain config (handle)

  - The page should state somewhere that handles are automatically created from the debtor details.

  - The difference between owner and admin contact is unclear.

  - We found that roughly half of our test users have problems selecting the right contacts for the right domains.

  - Sometimes it is even unclear to them whether they are working with debtor details or contact details. The system should point out where, how and when they can configure these contacts, and it simply does not.

- Domain config (nameservers)

  - Some button labels are a bit unclear: for example, the button for adding a new nameserver group states 'save changes' instead of 'add group'.

- Checkout

  - No problems here.

To improve the helpfulness and efficiency of the system, Exonet should take a look at the remarks that the users made during the execution of the scenarios. We also advise Exonet to rerun the research and protocol for another product, such as virtual servers or hosting packages. This way more glitches in the system will show.

General Findings

Technical users

Non-Technical users

Reflection

We have learned quite a bit from this research and research method. We have made some mistakes which had a negative influence on our results. These mistakes are listed below.

- This research did not have any control group, which would have been essential for better and more valid results. Even though it is a very small study, it should have contained two control groups: one representing the technical users and one representing the non-technical users. We divided and selected our test users by computer experience and created different scenarios for those groups. Each group had up to three scenarios to execute. Once we started analyzing our data and drawing conclusions from it, we found that we were missing two control groups. These groups would have executed the scenarios of the opposite experience level.

- Unfortunately there was a slight miscommunication when executing our scenarios. The technical users executed only one scenario, whereas the non-technical users executed all three (which was the original plan). Therefore we are missing a lot of data for our technical users. This was a mistake, but it does not affect the research much, since our conclusion consists of tips and comments for the development team at Exonet. Still, for more tips and more accurate results it would be helpful for Exonet to conduct some extra sessions with more users from both groups.

- When organizing the data we found that we had missed a critical point in our data collection. While adding actions we decided it would be useful to record the page each action took place on. This gives better results, because we can now see per page what goes well and what goes wrong.

Besides these problems and mistakes, we find the Think Aloud Protocol a very useful research method for gathering data. The next time we execute this protocol we need to instruct the users more explicitly to say more than they did in this research. We gathered enough data for this small study, but more comments would have been useful.

We also found that the recording software integrated in QuickTime on a Mac (probably also on PC) works quite well. It uses the built-in microphone and camera for recording, which was exactly the hardware we needed for this experiment.

Other notes and remarks can be found in every paragraph of the chapter 'Execution'.

Sources

http://www.useit.com/papers/heuristic/

http://ozm2.robbinjanssen.nl